Impacts of AI on the insurance industry and related risks

Artificial Intelligence (AI) is rapidly transforming the insurance industry worldwide, and Australia is no exception. As the insurance industry increasingly adopts AI to enhance operational efficiency, improve customer service, and innovate product offerings, the need for robust compliance frameworks becomes paramount. Ensuring that AI applications adhere to regulatory standards and ethical guidelines is critical to maintaining trust, fairness, and transparency in the insurance sector. Here we explore the implications of the insurance industry’s adoption of AI and highlight compliance considerations.

Insurance industry adoption

Leading industry sources have reported on the powerful AI applications available to the insurance industry and the likelihood that they will force innovation in many areas. At Bellrock we acknowledge these changes and are continuously investing in our technology platforms to help provide a streamlined service to our customers. We do, however, remain cautious about the role of AI in our customer servicing and the impact it may have on our customers’ coverage.

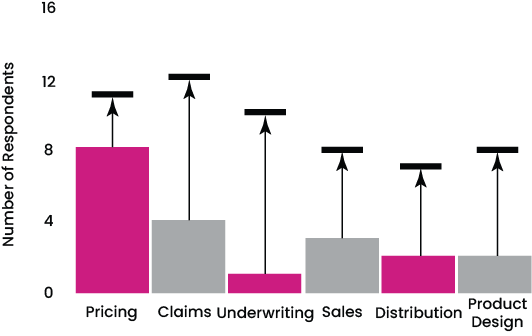

Overall, the insurance industry continues to grow its understanding of AI and its implications. The Australian Prudential Regulation Authority’s (APRA) 2020 Insurtech survey highlighted the growing local appetite for AI investment. Many of the thirty-six APRA-authorised general insurers surveyed were planning to increase investment in AI across all six elements of the insurance value chain from 2020 to 2023.

Source: APRA 2020 Insurtech Survey

Since this survey, insurers are understood to be using AI in some aspects of their processes, as outlined in this article. However, uptake is not consistent across the industry. This is likely because of a lack of clarity about the full capability of AI technologies and their true reliability. Many stakeholders in the insurance industry lack the capacity to innovate. They are constrained largely by compliance implications, and by the leap in investment and change management some companies would need to shift their reliance away from legacy systems. For example, Lloyd’s of London’s Blueprint Two, the plan to digitalise the Lloyd’s market, was announced five years ago and was intended to have phase two launched in April 2025. However, its implementation has been repeatedly delayed, with the release of phase one now expected in 2025.

Whilst adoption levels vary across the insurance industry, AI is being leveraged in underwriting, claims processing, fraud detection and customer service. Key applications include:

- Underwriting: Traditional underwriting processes often rely on manual evaluation of limited data, which can result in inaccuracies and inefficiencies. AI can analyse vast datasets to provide more accurate risk assessments, enabling personalised policy pricing.

- Claims processing: Automated systems speed up claims handling and improve accuracy, leading to quicker settlements.

- Fraud detection: Fraud is a significant concern in the insurance industry, costing billions of dollars annually, which in turn affects premiums. AI is playing a crucial role in combating fraud through advanced data analysis and pattern recognition. Insurers are increasingly adopting AI-driven fraud detection systems to improve their ability to identify and prevent fraudulent activity, including the use of AI-created imagery. These systems can quickly analyse vast amounts of data, cross-referencing claims against various databases to detect inconsistencies and anomalies that might indicate fraud.

- Customer service: AI-powered chatbots and virtual assistants offer 24/7 support, enhancing customer engagement and satisfaction. These tools are designed to assist with a wide range of inquiries, from answering basic questions to processing claims, thereby reducing wait times.

The above applications are growing in capability but are not without their challenges. Claims are often emotional for insureds, and an AI bot cannot advocate for a client in the way their adviser can. AI also lacks the subjective judgement often required in claims assessment. For example, applying AI to a motor claim requires less human oversight than applying it to a Directors’ & Officers’ liability claim, which is generally far more nuanced.

AI remains limited by the data to which it has access, and it lacks the knowledge an experienced insurance professional can apply. Whilst we may be able to use AI to assist with disclosures, particularly in analysing data, relying entirely on it to place complex insurance products for mid-market and large enterprises is fraught with risk.

Compliance challenges

While AI applications offer significant benefits, they also introduce new challenges related to compliance, data privacy, and ethical considerations:

- Data privacy: AI systems often process large volumes of personal data, raising concerns about data privacy and security. Compliance with the Privacy Act 1988 and the Australian Privacy Principles (APPs) is essential to protect customers' information.

- Fairness and non-discrimination: AI algorithms must be designed to avoid bias that could lead to unfair treatment of certain customer groups. Ensuring that AI decision-making processes are transparent and equitable is critical.

- Transparency: Regulators require insurers to explain how AI systems make decisions, especially in areas like underwriting and claims processing. Transparent AI models help build trust with customers and regulatory bodies.

Ethical issues around AI decision-making and the absence of robust regulation are prominent concerns. Establishing clear accountability for AI-driven decisions is crucial. Insurers need to ensure human oversight of AI processes, with the ability to intervene when necessary.

Insurers and intermediaries must implement robust governance frameworks to manage AI models throughout their lifecycle, including development, deployment, and monitoring. Ongoing monitoring and auditing of AI systems are necessary to ensure they remain compliant with regulatory standards and ethical guidelines.

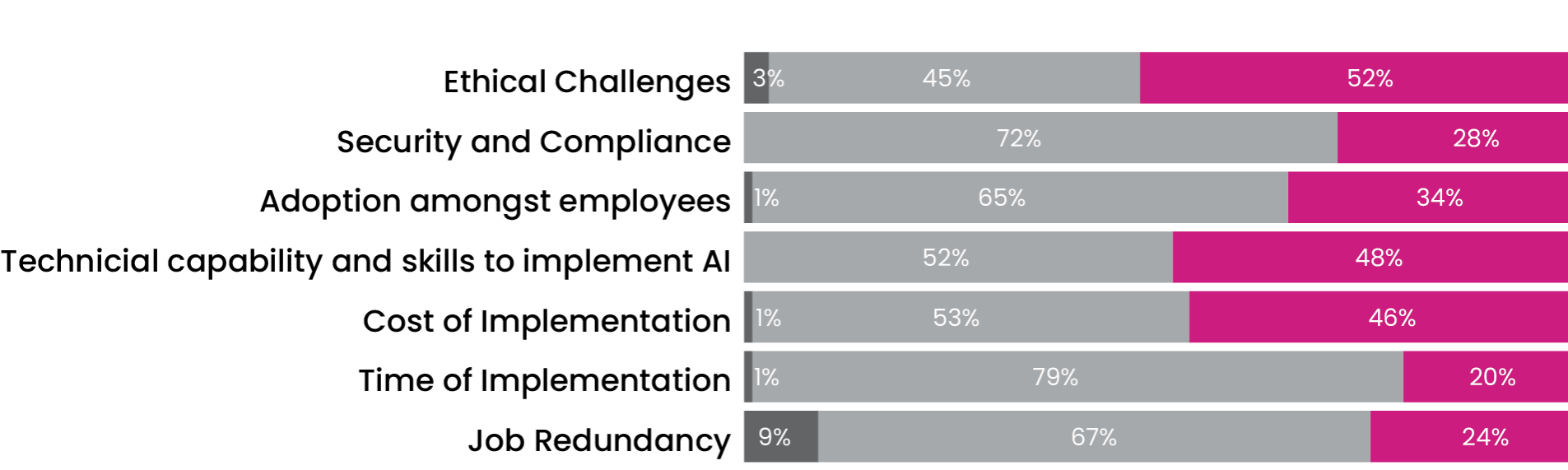

Australian legislation is lagging behind technological innovation. With very public AI failures making front-page news, it is no wonder some sectors are reluctant to adopt AI before clear regulatory “guard rails” are in place. Steadfast published an Artificial Intelligence whitepaper in July 2024. According to it, the two challenges its network brokers were most concerned about were regulatory and compliance concerns, and security or privacy concerns. These findings are in line with the KPMG 2023 Insurance CEO Outlook report. That report identified ethical challenges and lack of regulation as the biggest challenges in implementing generative AI:

Biggest challenges for genAI implementation

Source: KPMG International “KPMG 2023 Insurance CEO Outlook”

Best practice for AI compliance in the insurance industry

In our view, as the insurance industry continues to adopt AI, the following compliance best practices must inform that adoption:

- Implement strong data governance policies to ensure data quality, privacy and security. Regularly review and update these policies to comply with evolving regulations.

- Develop AI models that are interpretable and can provide clear explanations for their decisions. This transparency is crucial for regulatory compliance and customer trust.

- Ensure that AI systems have human oversight to validate and intervene in critical decision-making processes. This helps maintain accountability and addresses any unintended consequences of AI-driven decisions.

- Engage with regulators, industry bodies and customers to understand their concerns and expectations regarding AI use. This collaborative approach can inform better compliance strategies and build trust.

Coverage and disclosure implications

As understanding of AI’s capabilities increases, so too does understanding of the impact AI may have on insurance coverage. Examples of potential claim triggers arising from AI include:

- Cyber liability could be triggered where training or use of AI results in privacy breaches or digital threats

- Errors & Omissions policies may be triggered where the use of AI results in algorithmic bias or system failures

- Directors’ & Officers’ Liability claims could arise where the deployment (or even non-deployment) of AI in a company leads to mismanagement of company processes

- General liability policies may be triggered if the services or products using AI result in injury to others

- Workers’ compensation claims may result from an AI-controlled industrial robot injuring an employee

- Intellectual property policies could be triggered if the use of GenAI leads to the circulation of images that are too similar to copyrighted materials, or if the training data used is subject to IP protection

- Product liability claims could be brought by third parties if they are harmed due to defective AI or AI malfunctions

- Employment practices liability could be triggered if AI makes biased choices in employment and is deemed to be discriminatory

- Medical malpractice insurance could be triggered if an AI tool used in the medical field leads to an incorrect diagnosis

Whilst we have not yet seen insurers place explicit AI exclusions on policies, the above are only examples of the types of claims AI could lead to. Whether a claim is payable depends on its specific circumstances and the wording of the relevant policy. We urge caution in the use of AI and advise our customers to ensure they have considered the compliance and privacy challenges of using the technology.

Bellrock previously advised caution regarding online insurance transacting in our August 2023 article. We stand by our view that policyholders should remain cautious about relying on AI to inform their disclosures and critical decision-making. However, as understanding of AI’s capabilities increases, its adoption may help insureds improve the accuracy of their disclosures. AI presently relies on the limited data to which it has access. While the technology continues to evolve, human oversight remains vital.

Conclusion

The integration of AI in the insurance industry offers significant opportunities for innovation and efficiency. However, ensuring compliance with regulatory and ethical standards is essential to harness AI’s potential responsibly. In our view, insurers that prioritise transparency, fairness and accountability are likely to build trust with customers and regulators. This could drive sustainable growth and a competitive advantage in an increasingly AI-driven landscape. As AI technology evolves, continuous adaptation and proactive compliance will be key to the insurance industry’s success. The challenge for insurers will be to balance technological advancement with the need to maintain trust and transparency with their customers.

Notwithstanding the investment Bellrock has made in bespoke technology, including the potential use of AI, we pride ourselves on providing personal service to our clients. Technology complements advice and advocacy: it does not drive it. Our technology investments are focused on improving client service. The systems Bellrock uses ensure that our investment in technology benefits our clients through efficiencies, giving our experts more time to deliver personalised service and advice. We look forward to the insurance industry continuing to innovate in its use of technology, and to the enhancements this may bring to our customer servicing.